So I thought I’d pick up this topic from another thread, mainly because the way Mouse Gestures have been implemented in Solidworks is, IMO, incorrect. This view mainly stems from using a wide range of 3D software that does have mouse gestures, so it may require some explanation as to why I think they’ve been implemented in a “bad” way here and what needs to be done to improve them. They also need to become much more tightly integrated into the overall environment, not just reserved for launching a function (I’ll explain this further below).

As a whole, mouse gestures are meant to make it so that you can stay on screen more and not have to go over to a menu, toolbar, or feature/property manager. The more that can be customized to your process/liking, the better, and this is an area where I find most CAD software lacking in comparison to its DCC counterparts.

First: the activation of what you want to do should NOT happen until you release the mouse button over the function you want. There have been so many times that slightly touching a function activates the wrong thing, in very large part because the inner circle/area given to move in is quite small.
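To make the first point concrete, here is a minimal Python sketch of release-to-activate behavior with a generous dead zone in the middle of the wheel. Everything in it is an assumption for illustration: `GestureWheel`, `pick_sector`, the 40-pixel dead zone, and the eight-way layout are made-up names and values, not how Solidworks implements its wheel.

```python
import math

def pick_sector(dx, dy, n_sectors=8, dead_zone=40.0):
    """Return the sector index under the cursor, or None inside the dead zone.

    dx, dy: cursor offset from the press point in screen pixels (y grows downward).
    Sector 0 is centered on "right"; indices increase counter-clockwise.
    """
    if math.hypot(dx, dy) < dead_zone:
        return None
    angle = math.degrees(math.atan2(-dy, dx)) % 360.0  # 0 = right, 90 = up
    width = 360.0 / n_sectors
    return int(((angle + width / 2) % 360.0) // width)

class GestureWheel:
    """Hypothetical wheel: moving only highlights, releasing is what commits."""

    def __init__(self, commands, dead_zone=40.0):
        self.commands = commands
        self.dead_zone = dead_zone
        self.origin = None
        self.highlighted = None

    def press(self, x, y):
        self.origin = (x, y)
        self.highlighted = None

    def move(self, x, y):
        # Brushing over a sector updates the highlight and nothing else.
        ox, oy = self.origin
        self.highlighted = pick_sector(x - ox, y - oy,
                                       len(self.commands), self.dead_zone)

    def release(self, x, y):
        # The command is chosen only here, from the final cursor position.
        self.move(x, y)
        chosen = self.highlighted
        self.origin = None
        self.highlighted = None
        return None if chosen is None else self.commands[chosen]

wheel = GestureWheel(["right", "up-right", "up", "up-left",
                      "left", "down-left", "down", "down-right"])
wheel.press(500, 500)
wheel.move(600, 500)                   # brushes "right", nothing runs
result = wheel.release(500, 400)       # released over "up"
```

With this approach, accidentally grazing a neighboring function costs nothing, and releasing inside the dead zone cancels the gesture entirely.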

Second: Property manager functionality - Let’s say you want to do a Revolve feature, which can be added to the mouse gesture wheel, but then you are forced to input everything into the property manager. Yes, the property manager can be undocked and placed anywhere on the screen, but then it blocks part of the screen, which really defeats the purpose of being able to use the whole screen.

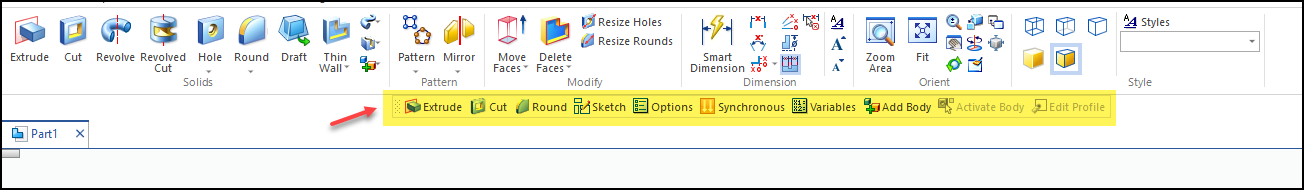

Third: Context tiers… we can have custom mouse gestures based on whether we’re in a sketch, part, assembly, or drawing. But this NEEDS to go so much further. Let’s say you’re in a sketch and want to do an extrude cut: imagine that the options within the property manager are placed as a second tier of the mouse gesture… this is a VERY crude video that shows an example of how the UI/UX would look… https://youtu.be/dLw8GXQ3Ok4.
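As a rough sketch of what a second tier could look like as data, here is a small Python example. The `GESTURES` table, the `next_tier` helper, and the option labels are all hypothetical and chosen only to illustrate the idea; none of this is a Solidworks API or an actual settings format.

```python
# Hypothetical two-tier gesture map: context -> first-tier command -> second-tier options.
# All labels are illustrative only.
GESTURES = {
    "sketch": {
        "Extrude Cut": ["Blind", "Through All", "Up To Next", "Flip Direction"],
        "Revolve": ["Pick Axis", "Angle", "Reverse Direction"],
    },
    "assembly": {
        "Mate": ["Coincident", "Concentric", "Distance"],
    },
}

def next_tier(context, first_pick=None):
    """Return the entries the wheel should show next.

    With no pick yet, this is the first tier for the current context.
    After a first-tier pick, it is that command's options (the second tier).
    Unknown contexts or commands give an empty wheel.
    """
    tiers = GESTURES.get(context, {})
    if first_pick is None:
        return list(tiers)
    return tiers.get(first_pick, [])

first = next_tier("sketch")                   # wheel shown on the first gesture
second = next_tier("sketch", "Extrude Cut")   # wheel shown after picking Extrude Cut
```

The point of keeping it as plain data is that the user could edit both tiers per context, instead of only choosing which command launches the property manager.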

The short of it is that the UI/UX of the software needs to be much more customizable. Sure, I have a ton of hotkeys and the like, but this falls far short of how far I’d really want to customize the way I operate within the software… thoughts?